Written by Juliet Mumby-Croft, Director

It’s an accepted principle that the structure of a cost-effectiveness model should reflect the natural history of disease, and that it should capture the differences in outcomes, costs and resource use expected to result from the use of a new treatment versus current standard of care (SoC). In practice, there can be a tendency to interpret the second of these guiding principles as “include everything”: that is, to include almost every clinical outcome, resource, and cost associated with the treatment of the disease.

This tendency may be driven by a fear of omitting something that does affect cost-effectiveness and that, by its absence, renders the model invalid, or at least biased, in the eyes of a reviewer. It may also be that, with input from clinical experts during model design, variables are included which are important from a clinical standpoint but which, from a health economic perspective, might reasonably be excluded.

Our job as health economists is to guide decision making: to estimate the likely impact of treatment on health outcomes, resource use, costs and cost-effectiveness, and to diminish the uncertainty associated with the decision rather than add to it (if we can help it). The more inputs a cost-effectiveness model includes, the more complex and less accessible it can become; it can become harder to follow cause and effect, and harder to validate. In essence, complexity can add to model uncertainty, and unnecessary complexity can unnecessarily add to uncertainty.

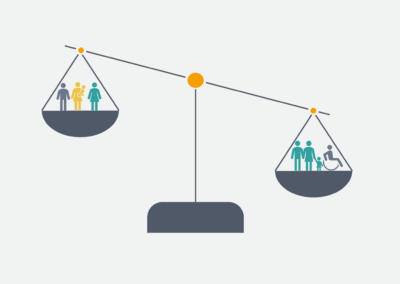

A good starting place is to determine the crux of the economic case. We start by listing how the new intervention might affect length of life and/or quality of life, and healthcare resource use. This reduces the list of every disease dynamic, outcome, and resource to a list of the differences between current practice and the new intervention.

For each possible difference, we then determine, with clinician input, firstly whether the difference is clinically realisable and meaningful, and secondly whether we have evidence of it. Differences that are not clinically realisable are likely to be omitted from the model. For example, a new antibiotic might clear a hospital-acquired infection more quickly than SoC but may not reduce length of stay if patients remain in hospital with their underlying condition. Outcomes with a small clinical impact may be considered for exclusion, for example a mild, transient rash which requires no treatment and affects only a small proportion of patients. Finally, differences for which we have no data, for example improvements in adherence associated with a once-a-day tablet, need careful thought and may best be included within a scenario or threshold analysis.
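The screening logic described above can be sketched in a few lines of Python. This is only an illustration: the names `CandidateDifference`, `Decision` and `screen` are hypothetical, and the sketch hardens what are really judgement calls ("likely to be omitted", "may be considered for exclusion") into fixed rules. In practice each flag would be set with clinician input rather than hard-coded.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    INCLUDE = "include in the base case"
    EXCLUDE = "consider omitting from the model"
    SCENARIO = "explore in a scenario or threshold analysis"


@dataclass(frozen=True)
class CandidateDifference:
    """One possible difference between the new intervention and SoC."""
    name: str
    clinically_realisable: bool
    clinically_meaningful: bool
    has_evidence: bool


def screen(diff: CandidateDifference) -> Decision:
    """Apply the screening questions in order (simplified assumption)."""
    # Differences that cannot be realised in practice, or that have only
    # a small clinical impact, are candidates for exclusion.
    if not (diff.clinically_realisable and diff.clinically_meaningful):
        return Decision.EXCLUDE
    # Plausible and meaningful, but no data: keep out of the base case
    # and test in a scenario or threshold analysis instead.
    if not diff.has_evidence:
        return Decision.SCENARIO
    return Decision.INCLUDE


# The three examples from the text; the flag values are illustrative
# assumptions, not data.
candidates = [
    CandidateDifference("Shorter length of stay (new antibiotic)", False, True, True),
    CandidateDifference("Mild, transient rash", True, False, True),
    CandidateDifference("Better adherence (once-a-day tablet)", True, True, False),
]

decisions = {c.name: screen(c) for c in candidates}

if __name__ == "__main__":
    for name, decision in decisions.items():
        print(f"{name}: {decision.value}")
```

Writing the questions down in this explicit form, even just as a table, makes it easier to show a reviewer why a given outcome was left out of the base case.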

Only once we have distilled our list of possible impacts to those which are clinically realisable and meaningful, and for which we have data, can we start to formulate our model methodology.